It is too early to write a full history of deep learning (and some of the details are contested), but we can already trace an admittedly incomplete outline of its origins and identify some of the pioneers. They include Warren McCulloch and Walter Pitts, who as early as 1943 proposed an artificial neuron, a computational model of the "nerve net" in the brain. In the late 1950s, Bernard Widrow and Ted Hoff at Stanford University developed a neural-network application that reduced noise in phone lines.

Around the same time, Frank Rosenblatt, an American psychologist, introduced the idea of a device called the Perceptron, which mimicked the neural structure of the brain and showed an ability to learn. MIT's Marvin Minsky and Seymour Papert then put a damper on this research in their 1969 book *Perceptrons* by showing mathematically that the Perceptron could perform only very basic tasks. A single-layer Perceptron cannot, for example, compute the simple exclusive-or (XOR) function. Their book also discussed the difficulty of training multilayer neural networks.

In 1986, Geoffrey Hinton at the University of Toronto, along with colleagues David Rumelhart and Ronald Williams, solved this training problem with the publication of a now-famous back-propagation training algorithm, although some practitioners credit a Finnish mathematician, Seppo Linnainmaa, with having invented back-propagation as early as 1970. Yann LeCun at New York University pioneered the use of neural networks on image-recognition tasks, and his 1998 paper laid out the concept of convolutional neural networks, which are loosely modeled on the human visual cortex.

In parallel, John Hopfield popularized the "Hopfield" network, one of the first recurrent neural networks. Sepp Hochreiter and Jürgen Schmidhuber built on this line of work in 1997 with the introduction of long short-term memory (LSTM), which greatly improved the practicality of recurrent neural networks by letting them learn dependencies across long sequences.

In 2012, Hinton and two of his students highlighted the power of deep learning when their network won the well-known ImageNet competition by a wide margin. The competition is based on a dataset collated by Fei-Fei Li and others. At the same time, Jeffrey Dean and Andrew Ng were doing breakthrough work on large-scale image recognition at Google Brain.

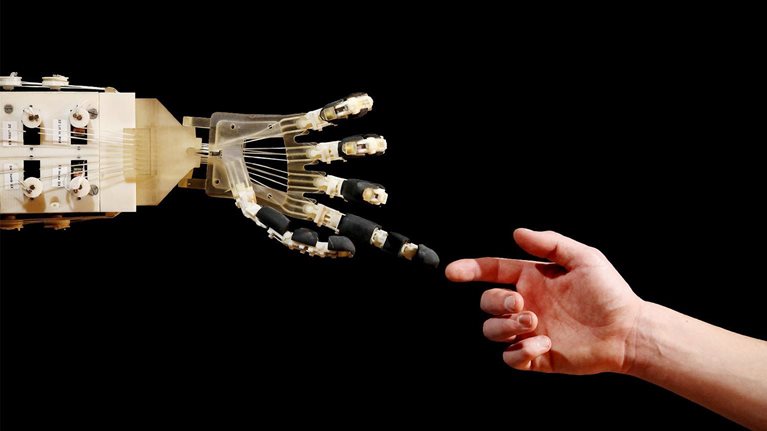

Visualizing the potential impact of AI and advanced analytics

Deep learning also enhanced the established field of reinforcement learning, pioneered by researchers such as Richard Sutton, and contributed to the game-playing successes of systems developed by DeepMind. In 2014, Ian Goodfellow and colleagues published a paper on generative adversarial networks (GANs). Together with reinforcement learning, GANs have become the focus of much of the recent research in the field.

Continuing advances in artificial-intelligence (AI) capabilities prompted Stanford University's One Hundred Year Study on Artificial Intelligence, founded by Eric Horvitz and building on the long-standing research he and his colleagues have led at Microsoft Research. We have benefited from the input and guidance of many of these pioneers in our research over the past few years.